JAXenter: Programming Alexa Skills with Python – how does it work? What is the basic procedure?

Francisco Rivas: Creating a skill with Python is very similar to how it is done in NodeJS. One of the main differences is that in Python you can use either decorators or classes; either way, as in NodeJS, each handler provides `can_handle` and `handle` logic.

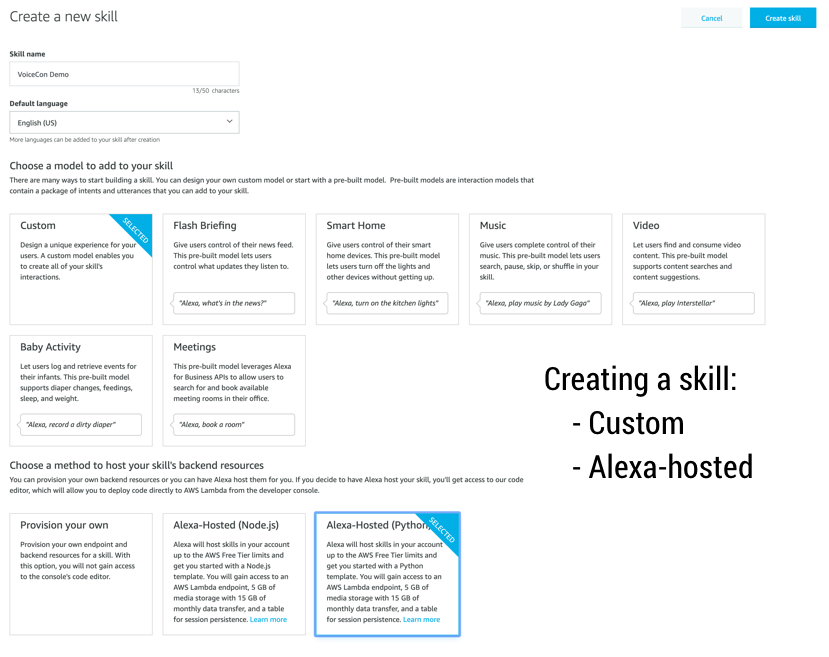

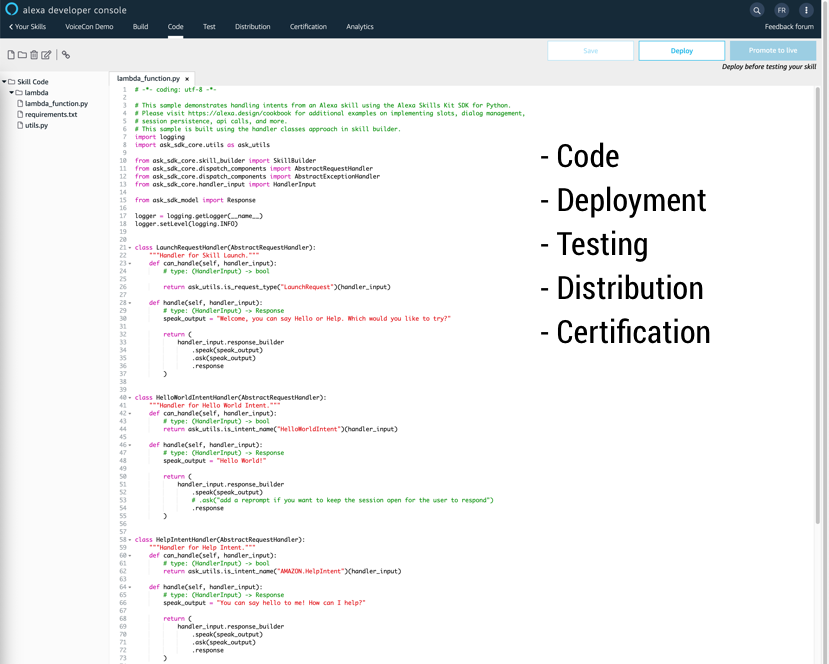
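To make the two styles concrete, here is a deliberately simplified, dependency-free model of the dispatch pattern. In the real ASK SDK for Python, the class style subclasses `AbstractRequestHandler` (from `ask_sdk_core.dispatch_components`) and is registered with `SkillBuilder.add_request_handler`, while the decorator style uses `SkillBuilder.request_handler(can_handle_func=...)`; the real handlers receive a `HandlerInput` and return a `Response`. `MiniSkill`, `RequestHandler`, `FunctionHandler` and the dict-based `Request` below are hypothetical stand-ins, not SDK classes.

```python
from typing import Callable, Dict, List

# Hypothetical stand-in for an incoming request. The real SDK passes a
# HandlerInput object that wraps the full Alexa request envelope.
Request = Dict[str, str]


class RequestHandler:
    """Class style: subclass and implement can_handle() and handle()."""

    def can_handle(self, request: Request) -> bool:
        raise NotImplementedError

    def handle(self, request: Request) -> str:
        raise NotImplementedError


class LaunchRequestHandler(RequestHandler):
    def can_handle(self, request: Request) -> bool:
        return request.get("type") == "LaunchRequest"

    def handle(self, request: Request) -> str:
        return "Welcome to the skill."


class FunctionHandler(RequestHandler):
    """Adapter that turns a predicate plus a plain function into a handler."""

    def __init__(self, can_handle_func: Callable[[Request], bool],
                 handle_func: Callable[[Request], str]) -> None:
        self._can_handle_func = can_handle_func
        self._handle_func = handle_func

    def can_handle(self, request: Request) -> bool:
        return self._can_handle_func(request)

    def handle(self, request: Request) -> str:
        return self._handle_func(request)


class MiniSkill:
    """Asks each registered handler in order; the first one whose
    can_handle() returns True handles the request."""

    def __init__(self) -> None:
        self._handlers: List[RequestHandler] = []

    def add_request_handler(self, handler: RequestHandler) -> None:
        self._handlers.append(handler)

    def request_handler(self, can_handle_func: Callable[[Request], bool]):
        """Decorator style: register a plain function as a handler."""
        def decorator(handle_func: Callable[[Request], str]) -> Callable[[Request], str]:
            self.add_request_handler(FunctionHandler(can_handle_func, handle_func))
            return handle_func
        return decorator

    def dispatch(self, request: Request) -> str:
        for handler in self._handlers:
            if handler.can_handle(request):
                return handler.handle(request)
        raise LookupError(f"no handler for {request.get('type')!r}")


skill = MiniSkill()
skill.add_request_handler(LaunchRequestHandler())  # class style


@skill.request_handler(  # decorator style
    can_handle_func=lambda r: r.get("type") == "IntentRequest"
    and r.get("intent") == "HelloWorldIntent"
)
def hello_world_handler(request: Request) -> str:
    return "Hello from Python!"


print(skill.dispatch({"type": "LaunchRequest"}))  # Welcome to the skill.
print(skill.dispatch({"type": "IntentRequest", "intent": "HelloWorldIntent"}))  # Hello from Python!
```

The ordering matters in both this model and the SDK: handlers are checked in registration order, so a catch-all handler should be registered last.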

Regarding the procedure, first you need to create a skill in the Alexa Developer Console:

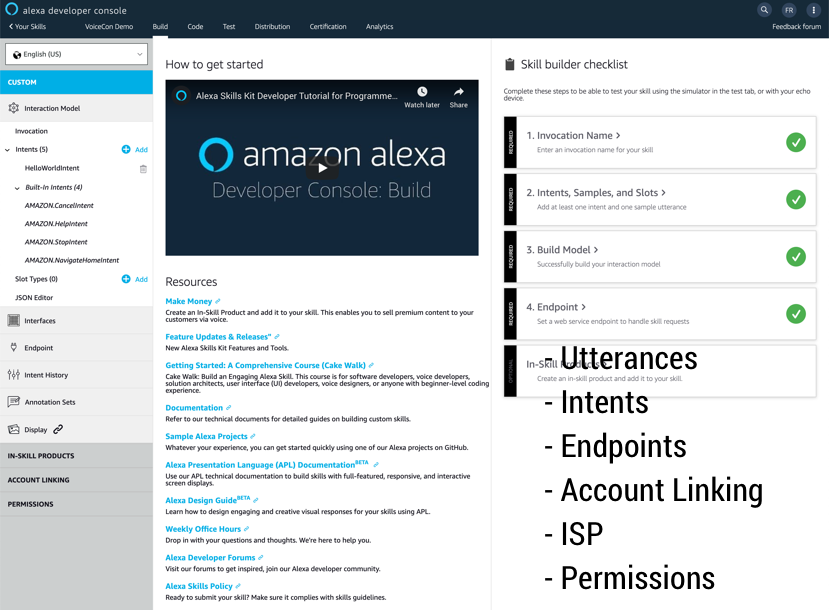

Then you have to create all the needed components, such as intents, utterances and so on:

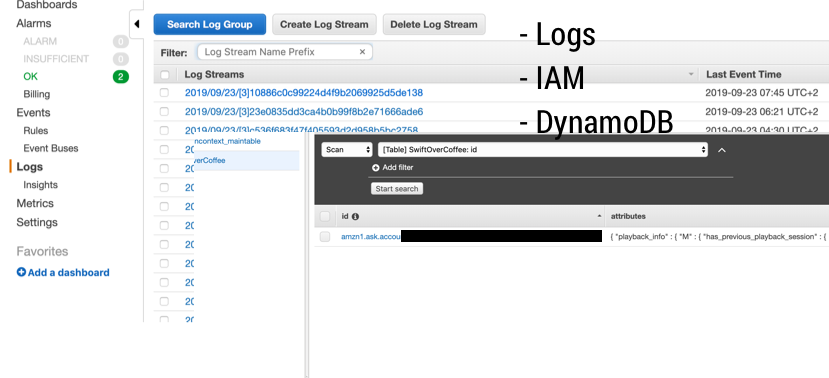
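The components created in the console are stored as a JSON interaction model, which the console's JSON Editor also lets you edit directly. A minimal sketch that builds such a model as a Python dict, assuming the usual layout with `invocationName`, `intents` (each with `name`, `slots` and `samples`) and `types` nested under `interactionModel` and `languageModel`. The skill, its invocation name and its utterances are hypothetical, and `custom_intents_without_samples` is an illustrative check, not a console or SDK feature.

```python
import json

# Hypothetical skill: the invocation name, intent name and sample
# utterances are placeholders chosen for illustration.
interaction_model = {
    "interactionModel": {
        "languageModel": {
            "invocationName": "python helper",
            "intents": [
                {
                    "name": "HelloWorldIntent",
                    "slots": [],
                    "samples": ["hello", "say hello", "hello world"],
                },
                # Built-in intents are referenced by their AMAZON.* names.
                {"name": "AMAZON.HelpIntent", "samples": []},
                {"name": "AMAZON.StopIntent", "samples": []},
                {"name": "AMAZON.CancelIntent", "samples": []},
            ],
            "types": [],
        }
    }
}


def custom_intents_without_samples(model: dict) -> list:
    """Names of non-built-in intents that have no sample utterances."""
    intents = model["interactionModel"]["languageModel"]["intents"]
    return [
        intent["name"]
        for intent in intents
        if not intent["name"].startswith("AMAZON.") and not intent.get("samples")
    ]


print(custom_intents_without_samples(interaction_model))  # []
print(json.dumps(interaction_model, indent=2))
```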

The next step is to create a Lambda function with the permissions and access rights it needs for the required resources (CloudWatch Logs, DynamoDB and so on).
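Those permissions live in the IAM role the function runs under. A minimal sketch of such a role's permissions policy, written as a Python dict so it can be dumped to JSON. The region, account ID and table name are placeholders, and the list of DynamoDB actions is an assumption about what a skill storing per-user data typically needs; adjust it to what your code actually calls.

```python
import json

# Placeholders: substitute your own region, account ID and table name.
REGION = "eu-west-1"
ACCOUNT_ID = "123456789012"
TABLE_NAME = "SkillUserData"


def skill_lambda_policy(region: str, account_id: str, table_name: str) -> dict:
    """Scoped policy: write CloudWatch logs, read/write one DynamoDB table."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "WriteCloudWatchLogs",
                "Effect": "Allow",
                "Action": [
                    "logs:CreateLogGroup",
                    "logs:CreateLogStream",
                    "logs:PutLogEvents",
                ],
                "Resource": f"arn:aws:logs:{region}:{account_id}:*",
            },
            {
                "Sid": "ReadWriteSkillTable",
                "Effect": "Allow",
                "Action": [
                    "dynamodb:GetItem",
                    "dynamodb:PutItem",
                    "dynamodb:UpdateItem",
                    "dynamodb:DeleteItem",
                ],
                "Resource": f"arn:aws:dynamodb:{region}:{account_id}:table/{table_name}",
            },
        ],
    }


policy = skill_lambda_policy(REGION, ACCOUNT_ID, TABLE_NAME)
print(json.dumps(policy, indent=2))
```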

And finally, you connect the skill to its Lambda function.
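Connecting the two sides involves two settings: the skill's endpoint must point at the function's ARN, and the function needs a resource-based permission that lets the Alexa Skills Kit invoke it, ideally restricted to your skill ID. A minimal sketch, assuming boto3 is used for the second step; the function name, ARN and skill ID are placeholders, and the helpers only build the arguments, so the snippet runs without AWS credentials.

```python
# Placeholders: use your own function name, ARN and skill ID.
FUNCTION_NAME = "my-python-skill"
LAMBDA_ARN = "arn:aws:lambda:eu-west-1:123456789012:function:my-python-skill"
SKILL_ID = "amzn1.ask.skill.00000000-0000-0000-0000-000000000000"


def alexa_trigger_permission(function_name: str, skill_id: str) -> dict:
    """Keyword arguments for boto3's Lambda add_permission(): allow the
    Alexa Skills Kit to invoke the function, but only for this skill ID."""
    return {
        "FunctionName": function_name,
        "StatementId": "alexa-skill-trigger",
        "Action": "lambda:InvokeFunction",
        "Principal": "alexa-appkit.amazon.com",
        "EventSourceToken": skill_id,
    }


def manifest_endpoint(lambda_arn: str) -> dict:
    """Fragment of the skill manifest (under "manifest") that points the
    custom skill at the Lambda function."""
    return {"apis": {"custom": {"endpoint": {"uri": lambda_arn}}}}


permission = alexa_trigger_permission(FUNCTION_NAME, SKILL_ID)
endpoint = manifest_endpoint(LAMBDA_ARN)

# With AWS credentials configured, the permission could then be applied:
#   import boto3
#   boto3.client("lambda").add_permission(**permission)
print(permission)
print(endpoint)
```

In the consoles, the same two settings correspond to adding an Alexa Skills Kit trigger with skill ID verification on the function and pasting the function's ARN into the skill's endpoint settings.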

For your development environment, depending on your preference, you can either create a virtual environment or simply use a folder containing the Alexa Skills Kit (ASK) SDK for Python plus any additional libraries you use. Once you have developed your Lambda function, you deploy it, and there are several ways of testing it.
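For the "simple folder" route, the deployment package Lambda expects is a .zip archive with your handler module and its dependencies at the archive root. A minimal sketch using only the standard library; the `build/` folder, the `lambda_function.py` name and `build_deployment_zip` itself are hypothetical, and tools such as the ASK CLI or AWS SAM can also do this packaging for you.

```python
import zipfile
from pathlib import Path
from typing import List


def build_deployment_zip(source_dir: Path, zip_path: Path) -> List[str]:
    """Zip every file under source_dir, storing paths relative to it so the
    handler module and installed libraries sit at the archive root.
    zip_path should be located outside source_dir."""
    names: List[str] = []
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(source_dir.rglob("*")):
            rel = path.relative_to(source_dir)
            # Skip directories (implicit in zip paths) and bytecode caches.
            if path.is_file() and "__pycache__" not in rel.parts:
                arcname = rel.as_posix()
                archive.write(path, arcname)
                names.append(arcname)
    return names


# Typical use, assuming a hypothetical build/ folder prepared with
#   pip install ask-sdk-core --target build
# plus your handler module (e.g. lambda_function.py) copied into build/:
#   build_deployment_zip(Path("build"), Path("skill.zip"))
```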

JAXenter: What is so special about Python when it comes to programming Skills? Does it let you do more than Java or Node.js?

Francisco Rivas: This is a very interesting question. Objectively, the advantage Python used to have was that its cold start times on Lambda were better. That changed this year: cold starts for NodeJS have improved, although Python still does better on warm runs. The code in Python is cleaner and more elegant, but that comes with the language itself. I think having an interactive Python console also helps with testing specific parts of your code. As for Java, that’s a different story: Java’s cold start times are really high; there’s no comparison.


The Voice Revolution

JAXenter: How do you see the field of voice recognition in general? Is it currently a niche? Or are we facing a revolution in how we interact with technology?


Francisco Rivas: Voice is already changing how we interact with everything around us. It is changing and improving really fast, and it is available to anyone. Any company can benefit from voice-enabled technologies, either to provide value to its customers or internally.

It is true that engagement and finding that “killer use case” are companies' main concerns. Voice is really versatile and fast for input. The barrier to entry for using a device is basically non-existent; moreover, voice has always been our natural way of communicating. It connects to our emotions, and that is one of the most important things to take into account when creating a voice experience: how we connect with our users. We have already entered the realm of voice-enabled disruption. More and more companies are investing (or at least realizing they need to invest) in voice-enabled technologies, and some are even dedicating a specific budget to it. For now, companies most likely use their marketing budgets to invest in a skill, and it is not an integral part of their core business strategy. However, that is changing.

JAXenter: What technical challenges do we currently face in the field of speech recognition?

Francisco Rivas: One challenge, when using cloud voice services, is handling the frustration of a user who said something correctly while the skill received something else, and recovering with as little friction as possible. Others include helping Alexa better understand certain vocabulary, for example niche terms or jargon; the quality of audio files used in SSML; and mixing Alexa’s voice with sound effects (SFX).
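On the SSML side, mixing Alexa's voice with SFX usually means interleaving text with `<audio>` elements. A small sketch, assuming a hypothetical sound-effect URL; the helpers are illustrative, not SDK functions, and they do nothing about audio quality itself, since file format and bitrate requirements are set by Amazon's SSML reference. The ASK SDK's `response_builder.speak()` adds the `<speak>` wrapper on its own, so the fragment is what you would pass there.

```python
from xml.sax.saxutils import escape, quoteattr


def speech_with_sfx(before: str, sfx_url: str, after: str) -> str:
    """SSML fragment: speech, then a sound effect via <audio>, then speech.
    Text is XML-escaped so characters like '&' do not break the markup."""
    return f"{escape(before)} <audio src={quoteattr(sfx_url)}/> {escape(after)}"


def as_ssml_document(fragment: str) -> str:
    """Wrap a fragment in the <speak> root element."""
    return f"<speak>{fragment}</speak>"


# Hypothetical sound effect URL; host your own audio file over HTTPS.
fragment = speech_with_sfx("Rock & roll!", "https://example.com/sfx/chime.mp3", "Let's begin.")
print(as_ssml_document(fragment))
# <speak>Rock &amp; roll! <audio src="https://example.com/sfx/chime.mp3"/> Let's begin.</speak>
```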

JAXenter: What is the core message of your session at VoiceCon?

Francisco Rivas: Go on and create a skill. No, seriously, create one. You can do it.

JAXenter: Thank you very much!

Meet Francisco Rivas at Voice Conference in his session: Creating an Alexa Skill with Python